MuRAL: A Multi-Resident Ambient Sensor Dataset Annotated with Natural Language for Activities of Daily Living

Download MuRAL.zip

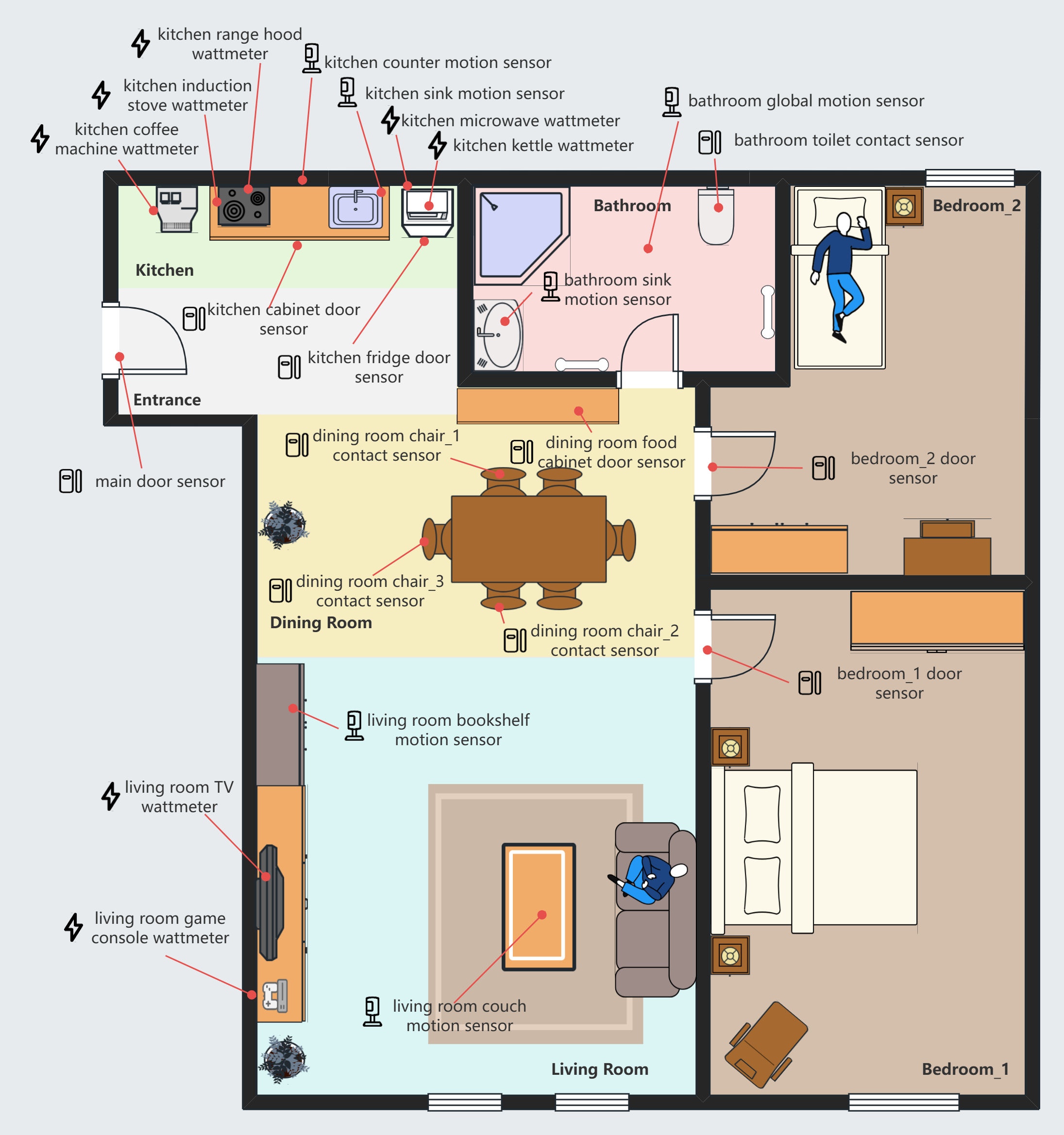

Recent progress in Large Language Models (LLMs) has enabled advanced reasoning and zero-shot recognition for human activity understanding with ambient sensor data. However, widely used multi-resident datasets such as CASAS, ARAS, and MARBLE lack natural language context and fine-grained annotation, limiting the full exploitation of LLM capabilities in realistic smart environments. To address this gap, we present MuRAL (Multi-Resident Ambient sensor dataset with natural Language), comprising over 21 hours of multi-user sensor data from 21 sessions in a smart home. MuRAL uniquely features detailed natural language descriptions, explicit resident identities, and rich activity labels, all situated in complex, dynamic, multi-resident scenarios. We benchmark state-of-the-art LLMs on MuRAL for three core tasks: subject assignment, action description, and activity classification. Results show that current LLMs still face major challenges on MuRAL, especially in maintaining accurate resident assignment over long sequences, generating precise action descriptions, and effectively integrating context for activity prediction.